The risks of artificial intelligence and the response of Korean civil society

SOURCE: The risks of artificial intelligence and the response of Korean civil society

AUTHORED BY: Byoung-il Oh

ORGANIZATION: Korean Progressive Network Jinbonet

WEBSITE: https://www.jinbo.net

With the launch of ChatGPT at the end of 2022, people around the world realised that the era of artificial intelligence (AI) had arrived, and South Korea was no exception. At the same time, 2023 was also a year of global awareness of the need to control the risks of AI. In November 2023, the AI Safety Summit was held at Bletchley Park, UK. Around the same time, legislators in the European Union (EU) agreed on an AI act, and the Biden administration in the US issued an executive order to regulate AI. In the coming years, discussions on AI regulation in various countries are bound to influence each other. Korean civil society also believes that a law is needed to stem the risks of AI. However, the bill currently being pushed by the Korean government and the National Assembly has met with opposition from civil society because, in the name of fostering Korea’s own AI industry, it lacks a proper regulatory framework.

The risks of AI and the case of South Korea

South Koreans have already embraced AI in their lives – right from chatbots to translation to recruitment and platform algorithms, a variety of AI-powered services have already been introduced into our society. While AI can provide efficiency and convenience in work and life, its development and use can also pose a number of risks, including threats to safety and violations of human rights. The risks commonly cited are invasion of privacy, discriminatory decisions, lack of accountability due to opacity, and sophisticated surveillance, which, when coupled with unequal power relations in society, can perpetuate inequities and discriminatory structures and threaten democracy.

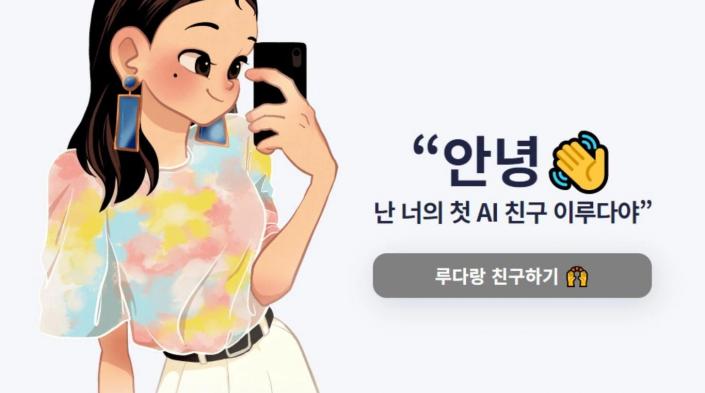

Jinbonet recently published a research report, produced with the support of an APC subgrant, on controversial AI-related cases in South Korea. Several of these cases have raised serious concerns. Lee Luda, a chatbot launched in December 2020, was criticised for its hate speech against vulnerable groups such as women, people with disabilities, LGBTQIA+ communities and Black people, and its developer was sanctioned by the Personal Information Protection Commission (PIPC) for violating the Personal Information Protection Act (PIPA).

In addition, the use of AI in recruitment processes has increased across both public and private companies in recent years, as corruption in recruitment in public institutions became a social issue and remote work became the norm during the COVID-19 pandemic. However, institutions have not properly verified the risks or performance of AI recruitment systems and have no data in this regard. It also remains an open question whether private companies’ AI-driven recruitment works fairly, without discrimination based on gender, region, education, etc.

The Ministry of Justice and the Ministry of Science and ICT sparked a huge controversy when they provided facial recognition data of 170 million Koreans and foreigners to a private company without consent, under the guise of upgrading the airport immigration system. Civil society groups suspect that public authorities provided such personal data to favour private tech companies.

There is also suspicion that big tech platforms abuse their algorithms to gain an advantage over their competitors. Kakao, which provides KakaoTalk, a messenger app used by almost all Koreans, used its dominance to take over the taxi market. It was fined by the Korea Fair Trade Commission (KFTC) in February 2023 after it was found to have manipulated its AI dispatching algorithm in favour of its own taxis. Similarly, another Korean big tech company, Naver, was fined by the KFTC in 2020 for manipulating shopping search algorithms to favour its own products. Korean civil society is also concerned about the use of AI systems for state surveillance. While the use of AI systems by intelligence and investigative agencies has not yet become controversial, the Korean government has invested in R&D for so-called “smart policing”, and, given that South Korea has one of the highest numbers of CCTVs installed globally, there are concerns that surveillance through intelligent CCTVs could be introduced.

AI regulation and civil society response

While existing regulations such as the PIPA and the Fair Trade Act can be applied to AI systems, South Korea has no legislation that specifically regulates AI as a whole. For example, as in the case of AI recruitment systems, there are no requirements to ensure accountability when public institutions develop their own AI systems or adopt private sector ones. There is also no obligation to take measures to proactively control problems with AI, such as verifying data bias, or to reactively track the source of problems, such as record-keeping.

The Korean government has been promoting the development of the AI industry as a national strategy for several years. The National Strategy for Artificial Intelligence (AI) was released on 17 December 2019 by all ministries, including the Ministry of Science and ICT. As the slogan “Beyond IT powerhouse to AI powerhouse” shows, the strategy is an expression of the government’s policy to understand AI as a civilisational change and use it as an opportunity to develop the economy and solve social problems. However, this strategy is based on an “allow first, regulate later” approach to AI regulation. Therefore, the policy is mainly based on the establishment of an AI ethical code that can serve as a guide for self-regulation of companies.

Civil society organisations (CSOs) in South Korea have also been making their voices heard on AI-related policies for several years. On 24 May 2021, 120 CSOs released their manifesto, Civil Society Declaration on Demand for an AI Policy that Ensures Human Rights, Safety, and Democracy. Calling for AI to respect human rights and comply with the law, and for the enactment of an AI act, the CSOs suggested that the act should include:

- A national supervision system for AI

- Transparency and participation

- AI risk assessment and control, and

- A rights redress mechanism.

The CSOs have also worked with the National Human Rights Commission (NHRC), urging it to play an active role in regulating AI from a human rights perspective. On 11 May 2022, the NHRC released its road map, Human Rights Guidelines on the Development and Use of Artificial Intelligence, to prevent any human rights violations and discrimination that may occur in the process of developing and using AI. It plans to release an AI human rights impact assessment tool in 2024. Activists of Jinbonet participated in research work to establish human rights guidelines for the NHRC and to develop a human rights impact assessment tool.

The Korean government, particularly the Ministry of Science and ICT, which is the lead ministry, is also pushing for legislation to regulate AI. In early 2023, the relevant standing committee of the National Assembly discussed an AI bill that merged bills proposed by several lawmakers and on which the Ministry of Science and ICT was also consulted. However, while the bill aims to establish a basic law on AI, it is mainly focused on fostering the industry. It advocates the principle of “allow first, regulate later”, and includes neither obligations or penalties for providers to control the risks of AI nor remedies for those who suffer harm from AI.

Korean civil society agrees that laws are needed to regulate AI but is vehemently opposed to the AI bill currently being debated in the National Assembly. Instead, Korean CSOs spent 2023 discussing their own proposal for an AI bill. Led by digital rights groups, including Jinbonet, they developed a draft and received inputs from a wider panel of CSO activists and experts at the civil society forum, Artificial Intelligence and The Role of Civil Society for Human Rights and Safety, held on 22 November 2023 and funded by APC. They intend to propose a civil society version of the AI bill to the National Assembly.

Next steps

The AI legislation being debated in Europe has also influenced Korean civil society, which has examined the positions of its European counterparts on the AI act, the positions of the European Data Protection Board (EDPB) and European Data Protection Supervisor (EDPS), the negotiating position of the European Parliament, etc. Although the European AI act, which was agreed at the end of 2023, is a step backward compared to civil society’s position and the European Parliament’s position, it offers a number of reference points at the global level. Of course, when discussing AI legislation in Korea, it is necessary to consider the different legal systems and social conditions of Europe and Korea.

Korean industry, pro-industry experts and conservative media oppose AI regulation. They argue that the European Union is trying to regulate AI because it is lagging behind, and that Korea should not rush to regulate AI if it wants to foster its own AI industry. They have used the same logic for privacy regulation and big tech regulation. South Korea’s own big tech companies such as Naver and Kakao are also developing hyperscale AI. As a result, public opinion is very strongly in favour of domestic big tech and AI industries.

South Korea is holding its general election in April 2024. Any bills that fail to pass in the current National Assembly will be abandoned when the new National Assembly is constituted in June 2024. It is unlikely that AI bills will be fully discussed in the current National Assembly. Korean civil society intends to ask the new National Assembly to introduce a civil society AI bill and urge it to pass legislation that will actually regulate AI. To build public opinion for the passage of the AI bill, Korean civil society, including Jinbonet, is set on identifying and publicising more instances of the dangers of AI.

* Image: Lee Luda chatbot via Scatter Lab